
In some cases, you might need to crawl a web site, as it is called, to gather keywords or email addresses. Web sites can use a robots.txt file to keep simple automated crawlers away from the entire site or from part of it. Using the Robots Exclusion Protocol, the robots.txt file tells web robots what they are not allowed to visit on the site. For example, a website might contain the following robots.txt:

```
User-Agent: *
Disallow: /
```

This robots.txt asks all robots to stay away from every page on the web site. For example, before a compliant robot visits http://www.domain.topdomain/examplepage.html, it first fetches the robots.txt file from the root of the site, http://www.domain.topdomain/robots.txt, and, finding "Disallow: /", does not request the page. Nothing enforces this, however: many web crawlers simply ignore robots.txt, so it should never be used as a security measure to hide information. When we investigate or test web site security, we should be able to ignore it as well.

I have mentioned in previous blog posts the tool called wget, a very useful command-line tool for downloading a web page, a whole website, or even malware. wget can also be configured to ignore the robots.txt file, but by default it respects the file, so you need to tell it explicitly to ignore its directions.
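The check a compliant robot performs can be sketched with Python's standard `urllib.robotparser` module. This is a minimal sketch under stated assumptions, not how wget implements it: `robots_url` and `is_allowed` are hypothetical helper names, `AnyBot` is a made-up agent name, and `www.domain.topdomain` is the placeholder host used in this post.

```python
from urllib.parse import urlsplit, urlunsplit
from urllib.robotparser import RobotFileParser

# The example robots.txt from above: a single record that applies to every robot.
ROBOTS_TXT = """\
User-Agent: *
Disallow: /
"""


def robots_url(page_url: str) -> str:
    """Return the robots.txt URL for the site hosting page_url (hypothetical helper).

    The file is always looked up at the root of the host, no matter how
    deep the requested page is.
    """
    parts = urlsplit(page_url)
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


def is_allowed(robots_txt: str, user_agent: str, page_url: str) -> bool:
    """Answer the question a compliant robot asks before fetching page_url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, page_url)


page = "http://www.domain.topdomain/examplepage.html"
print(robots_url(page))                        # http://www.domain.topdomain/robots.txt
print(is_allowed(ROBOTS_TXT, "AnyBot", page))  # False
```

The answer is purely advisory: nothing in the exchange stops a client that never calls such a check.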

```
wget -e robots=off --wait 1 -m http://domain.topdomain

FINISHED --2014-10-26 11:12:36--
Downloaded: 35 files, 22M in 19s (1.16 MB/s)
```

Without the robots=off option, the result is very different:

```
wget -m http://domain.topdomain

FINISHED --2014-10-26 11:56:53--
Downloaded: 1 files, 5.5K in 0s (184 MB/s)
```

This example makes it clear that we would have missed 34 files had we not been familiar with this simple file and its purpose.
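The difference between the two runs can be sketched as a crawl planner with a switch. This is a simplified sketch, not wget's logic: `plan_crawl` and the three page URLs are hypothetical, and the sketch assumes the URLs are already known, whereas a real mirroring crawler discovers them by following links (so when the start page is disallowed, it never even learns about the rest of the site).

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = "User-agent: *\nDisallow: /\n"

# Hypothetical pages a mirroring crawler might want to fetch.
DISCOVERED = [
    "http://domain.topdomain/",
    "http://domain.topdomain/index.html",
    "http://domain.topdomain/docs/report.pdf",
]


def plan_crawl(robots_txt, user_agent, urls, respect_robots=True):
    """Return the subset of urls a crawler would actually request.

    respect_robots=False plays the role of wget's `-e robots=off`:
    robots.txt is simply never consulted.
    """
    if not respect_robots:
        return list(urls)
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if parser.can_fetch(user_agent, url)]


print(len(plan_crawl(ROBOTS_TXT, "Wget/1.11", DISCOVERED)))                        # 0
print(len(plan_crawl(ROBOTS_TXT, "Wget/1.11", DISCOVERED, respect_robots=False)))  # 3
```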

Using "User-Agent: *" is a good way to keep out robots whose names you do not know in advance, as long as those robots honor robots.txt and do not use other methods to get to the website contents. Let's see what happens when we run wget without robots=off.

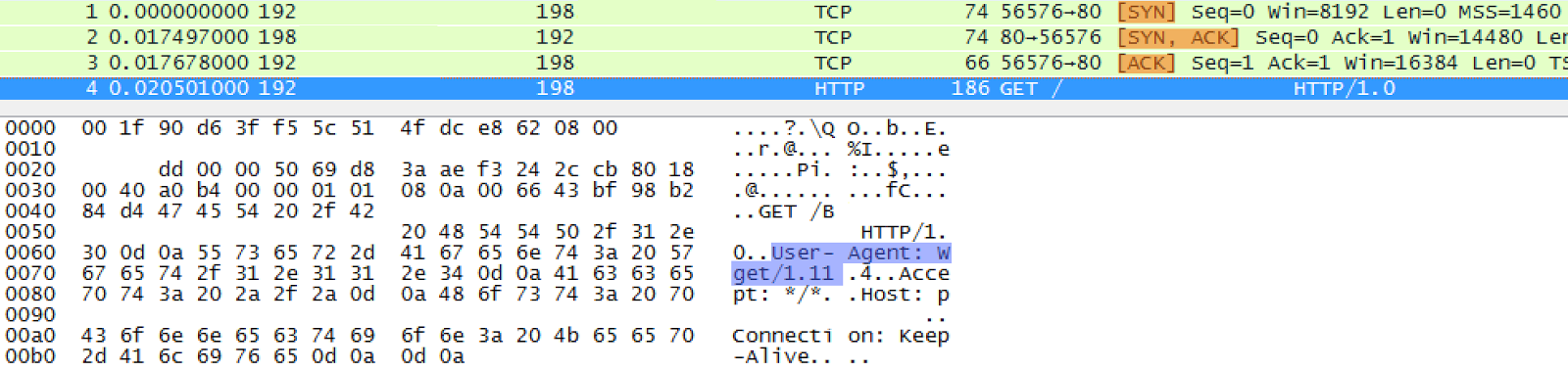

As you can see, the User-Agent is set to Wget/1.11 (the default format is Wget/version), so a robots.txt containing the entries listed below would match this utility and keep it from retrieving the website contents.
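Whether a record "matches" depends on the crawler's own matching rule, which is worth testing rather than assuming. The sketch below uses Python's `urllib.robotparser` as the example parser; its rule is specific to that implementation, and other crawlers, wget included, may match names differently. `wget_allowed` is a hypothetical helper.

```python
from urllib.robotparser import RobotFileParser

# A version-pinned record plus a catch-all record that allows everything
# (an empty Disallow line means "nothing is disallowed").
PINNED_ONLY = """\
User-agent: Wget/1.6
Disallow: /

User-agent: *
Disallow:
"""

# The same file with a bare product-name record added in front.
WITH_BARE_NAME = """\
User-agent: Wget
Disallow: /

""" + PINNED_ONLY


def wget_allowed(robots_txt: str, user_agent: str = "Wget/1.11") -> bool:
    """Ask Python's parser whether user_agent may fetch a sample page."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, "http://domain.topdomain/index.html")


print(wget_allowed(PINNED_ONLY))     # True: the "Wget/1.6" record does not match
print(wget_allowed(WITH_BARE_NAME))  # False: the bare "Wget" record matches
```

In this parser, the requesting agent is cut at the first "/" and lowercased, and a record applies if its lowercased name is a substring of what remains. A record that itself contains a "/version" suffix can therefore never match, not even `Wget/1.6` itself; only the bare `Wget` entry catches Wget/1.11.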

Note: The three packets highlighted in orange are the TCP three-way handshake, so the request for the resources, carrying the User-Agent setting, is the first packet following the handshake. That might be a good pattern to use for alarm settings.
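An alarm built on that pattern would inspect the first request after the handshake and look at its User-Agent header. This is a minimal sketch, assuming the TCP payload has already been reassembled into bytes: `user_agent_of`, `should_alert`, the sample payload, and the `WATCHLIST` subset (taken from the robots.txt list in this post) are all hypothetical. Because the User-Agent is self-reported, a client can evade such a rule simply by sending a different string.

```python
from typing import Optional

# A small, hypothetical sample of names from the robots.txt list in this post.
WATCHLIST = ("wget", "emailsiphon", "emailcollector", "python-urllib")


def user_agent_of(payload: bytes) -> Optional[str]:
    """Return the User-Agent value from a raw HTTP/1.x request, or None."""
    head = payload.split(b"\r\n\r\n", 1)[0]
    for line in head.split(b"\r\n")[1:]:  # skip the request line
        name, sep, value = line.partition(b":")
        if sep and name.strip().lower() == b"user-agent":
            return value.strip().decode("latin-1")
    return None


def should_alert(payload: bytes) -> bool:
    """Flag requests whose User-Agent contains a watched robot name."""
    agent = user_agent_of(payload)
    return agent is not None and any(name in agent.lower() for name in WATCHLIST)


# Hypothetical first data packet after the handshake.
first_packet = (
    b"GET / HTTP/1.0\r\n"
    b"User-Agent: Wget/1.11\r\n"
    b"Host: domain.topdomain\r\n"
    b"\r\n"
)
print(user_agent_of(first_packet))  # Wget/1.11
print(should_alert(first_packet))   # True
```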

wget also has an option, --user-agent, to replace the default User-Agent string with anything the user wants.

```
wget --user-agent=ZOLTAN -m http://domain.topdomain
```

As you can see in the packet capture, the User-Agent was overridden as the option promised, but wget still downloaded only a single file: the "User-agent: *" record matched the unknown string, and wget honored it. So robots.txt can help protect a website to a certain extent, but the -e robots=off option retrieved the whole website content even though the request carried the unmodified default User-Agent string.
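The fall-through to the "*" record, and its weakness, can be sketched with Python's `urllib.robotparser`. The policy below is hypothetical: `GoodBot` is a made-up name for a robot the site owner wants to let in, and everyone else is sent to the "*" record.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical policy: one named robot is welcome, everyone else is not.
ROBOTS_TXT = """\
User-agent: GoodBot
Disallow:

User-agent: *
Disallow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

url = "http://domain.topdomain/index.html"
for agent in ("ZOLTAN", "Wget/1.11", "GoodBot/2.0"):
    # Any name without its own record falls through to the "*" record.
    print(agent, parser.can_fetch(agent, url))
```

This prints False for ZOLTAN and Wget/1.11 and True for GoodBot/2.0. The last line is the catch: the User-Agent is whatever the client claims, so a crawler that sends "GoodBot" is let through, and, as with robots=off, the file only constrains robots that choose to obey it.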

A robots.txt file can also name specific robots in order to keep unsafe ones away from a web site, or to provide basic protection from these "pests" (this list is not exhaustive, but it is a good source for learning about suspicious packet contents and a good starting point for further reading on each of these software tools):

```
User-agent: Aqua_Products
Disallow: /

User-agent: asterias
Disallow: /

User-agent: b2w/0.1
Disallow: /

User-agent: BackDoorBot/1.0
Disallow: /

User-agent: Black Hole
Disallow: /

User-agent: BlowFish/1.0
Disallow: /

User-agent: Bookmark search tool
Disallow: /

User-agent: BotALot
Disallow: /

User-agent: BuiltBotTough
Disallow: /

User-agent: Bullseye/1.0
Disallow: /

User-agent: BunnySlippers
Disallow: /

User-agent: Cegbfeieh
Disallow: /

User-agent: CheeseBot
Disallow: /

User-agent: CherryPicker
Disallow: /

User-agent: CherryPicker /1.0
Disallow: /

User-agent: CherryPickerElite/1.0
Disallow: /

User-agent: CherryPickerSE/1.0
Disallow: /

User-agent: CopyRightCheck
Disallow: /

User-agent: cosmos
Disallow: /

User-agent: Crescent
Disallow: /

User-agent: Crescent Internet ToolPak HTTP OLE Control v.1.0
Disallow: /

User-agent: DittoSpyder
Disallow: /

User-agent: EmailCollector
Disallow: /

User-agent: EmailSiphon
Disallow: /

User-agent: EmailWolf
Disallow: /

User-agent: EroCrawler
Disallow: /

User-agent: ExtractorPro
Disallow: /

User-agent: FairAd Client
Disallow: /

User-agent: Flaming AttackBot
Disallow: /

User-agent: Foobot
Disallow: /

User-agent: Gaisbot
Disallow: /

User-agent: GetRight/4.2
Disallow: /

User-agent: grub
Disallow: /

User-agent: grub-client
Disallow: /

User-agent: Harvest/1.5
Disallow: /

User-agent: hloader
Disallow: /

User-agent: httplib
Disallow: /

User-agent: humanlinks
Disallow: /

User-agent: ia_archiver
Disallow: /

User-agent: ia_archiver/1.6
Disallow: /

User-agent: InfoNaviRobot
Disallow: /

User-agent: Iron33/1.0.2
Disallow: /

User-agent: JennyBot
Disallow: /

User-agent: Kenjin Spider
Disallow: /

User-agent: Keyword Density/0.9
Disallow: /

User-agent: larbin
Disallow: /

User-agent: LexiBot
Disallow: /

User-agent: libWeb/clsHTTP
Disallow: /

User-agent: LinkextractorPro
Disallow: /

User-agent: LinkScan/8.1a Unix
Disallow: /

User-agent: LinkWalker
Disallow: /

User-agent: LNSpiderguy
Disallow: /

User-agent: lwp-trivial
Disallow: /

User-agent: lwp-trivial/1.34
Disallow: /

User-agent: Mata Hari
Disallow: /

User-agent: Microsoft URL Control
Disallow: /

User-agent: Microsoft URL Control - 5.01.4511
Disallow: /

User-agent: Microsoft URL Control - 6.00.8169
Disallow: /

User-agent: MIIxpc
Disallow: /

User-agent: MIIxpc/4.2
Disallow: /

User-agent: Mister PiX
Disallow: /

User-agent: moget
Disallow: /

User-agent: moget/2.1
Disallow: /

User-agent: mozilla/4
Disallow: /

User-agent: Mozilla/4.0 (compatible; BullsEye; Windows 95)
Disallow: /

User-agent: Mozilla/4.0 (compatible; MSIE 4.0; Windows 2000)
Disallow: /

User-agent: Mozilla/4.0 (compatible; MSIE 4.0; Windows 95)
Disallow: /

User-agent: Mozilla/4.0 (compatible; MSIE 4.0; Windows 98)
Disallow: /

User-agent: Mozilla/4.0 (compatible; MSIE 4.0; Windows ME)
Disallow: /

User-agent: Mozilla/4.0 (compatible; MSIE 4.0; Windows NT)
Disallow: /

User-agent: Mozilla/4.0 (compatible; MSIE 4.0; Windows XP)
Disallow: /

User-agent: mozilla/5
Disallow: /

User-agent: MSIECrawler
Disallow: /

User-agent: NetAnts
Disallow: /

User-agent: NetMechanic
Disallow: /

User-agent: NICErsPRO
Disallow: /

User-agent: Offline Explorer
Disallow: /

User-agent: Openbot
Disallow: /

User-agent: Openfind
Disallow: /

User-agent: Openfind data gathere
Disallow: /

User-agent: Oracle Ultra Search
Disallow: /

User-agent: PerMan
Disallow: /

User-agent: ProPowerBot/2.14
Disallow: /

User-agent: ProWebWalker
Disallow: /

User-agent: psbot
Disallow: /

User-agent: Python-urllib
Disallow: /

User-agent: QueryN Metasearch
Disallow: /

User-agent: Radiation Retriever 1.1
Disallow: /

User-agent: RepoMonkey
Disallow: /

User-agent: RepoMonkey Bait & Tackle/v1.01
Disallow: /

User-agent: RMA
Disallow: /

User-agent: searchpreview
Disallow: /

User-agent: SiteSnagger
Disallow: /

User-agent: SpankBot
Disallow: /

User-agent: spanner
Disallow: /

User-agent: suzuran
Disallow: /

User-agent: Szukacz/1.4
Disallow: /

User-agent: Teleport
Disallow: /

User-agent: TeleportPro
Disallow: /

User-agent: Telesoft
Disallow: /

User-agent: The Intraformant
Disallow: /

User-agent: TheNomad
Disallow: /

User-agent: TightTwatBot
Disallow: /

User-agent: Titan
Disallow: /

User-agent: toCrawl/UrlDispatcher
Disallow: /

User-agent: True_Robot
Disallow: /

User-agent: True_Robot/1.0
Disallow: /

User-agent: turingos
Disallow: /

User-agent: URL Control
Disallow: /

User-agent: URL_Spider_Pro
Disallow: /

User-agent: URLy Warning
Disallow: /

User-agent: VCI
Disallow: /

User-agent: VCI WebViewer VCI WebViewer Win32
Disallow: /

User-agent: Web Image Collector
Disallow: /

User-agent: WebAuto
Disallow: /

User-agent: WebBandit
Disallow: /

User-agent: WebBandit/3.50
Disallow: /

User-agent: WebCopier
Disallow: /

User-agent: WebEnhancer
Disallow: /

User-agent: WebmasterWorldForumBot
Disallow: /

User-agent: WebSauger
Disallow: /

User-agent: Website Quester
Disallow: /

User-agent: Webster Pro
Disallow: /

User-agent: WebStripper
Disallow: /

User-agent: WebZip
Disallow: /

User-agent: WebZip/4.0
Disallow: /

User-agent: Wget
Disallow: /

User-agent: Wget/1.5.3
Disallow: /

User-agent: Wget/1.6
Disallow: /

User-agent: WWW-Collector-E
Disallow: /

User-agent: Xenu's
Disallow: /

User-agent: Xenu's Link Sleuth 1.1c
Disallow: /

User-agent: Zeus
Disallow: /

User-agent: Zeus 32297 Webster Pro V2.9 Win32
Disallow: /

User-agent: Zeus Link Scout
Disallow: /
```
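A list this long is easy to break by hand, for example by splitting a User-agent line from its Disallow line. One way to keep the records consistent is to generate the file from a list of names. This is a minimal sketch: `build_robots_txt` is a hypothetical helper, `BLOCKED` is a small sample of the names above, and `SomeBrowser/1.0` is a made-up agent. Each record's two lines stay together and records are separated by a single blank line, following the original Robots Exclusion Protocol convention.

```python
from urllib.robotparser import RobotFileParser

# A few names from the list above; extend as needed.
BLOCKED = ["EmailCollector", "EmailSiphon", "EmailWolf", "WebZip"]


def build_robots_txt(names):
    """Emit one 'User-agent' + 'Disallow: /' record per name."""
    return "\n\n".join(f"User-agent: {name}\nDisallow: /" for name in names) + "\n"


robots_txt = build_robots_txt(BLOCKED)
parser = RobotFileParser()
parser.parse(robots_txt.splitlines())

url = "http://domain.topdomain/index.html"
print(parser.can_fetch("EmailSiphon", url))      # False: it has its own record
print(parser.can_fetch("SomeBrowser/1.0", url))  # True: no record and no "*" fallback
```

Note the second result: a list of named records alone, without a "User-agent: *" record, leaves every unlisted robot free to crawl.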

This blog is dedicated to developing a methodology for a scientific approach to computer science education and to cybersecurity fields such as digital forensics and information assurance. This site is not intended as a reference for cybersecurity practitioners, but as guidance for those who are entering the field and would like to learn the basics of the scientific approach and the methodical testing of scientific problems, which is missing from a basic computer science and technology-based STEM education.
